This article focuses on docker-compose features beyond simple services and networks. It is assumed that you are already familiar with yaml, the basic docker-compose.yml structure and how to define services / containers in it.

Implicit default network

Docker Compose is mostly used to define and run multi-container applications or services, which need to talk to one another. Many older compose files (and guides) include a network with default settings for this purpose alone. Since compose v1.9, a default network is always created implicitly, so network definitions are only necessary if you need non-default configurations or network types other than bridge (for example to assign static ips to a container).

Have a look at this sample compose file:

docker-compose.yml

services:sample:image: bash:latestNotice that no network was defined. But when checking the generated configuration:

docker compose configYou can see a default network being created anyway:

name: sample

services:

sample:

image: bash:latest

networks:

default: null

networks:

default:

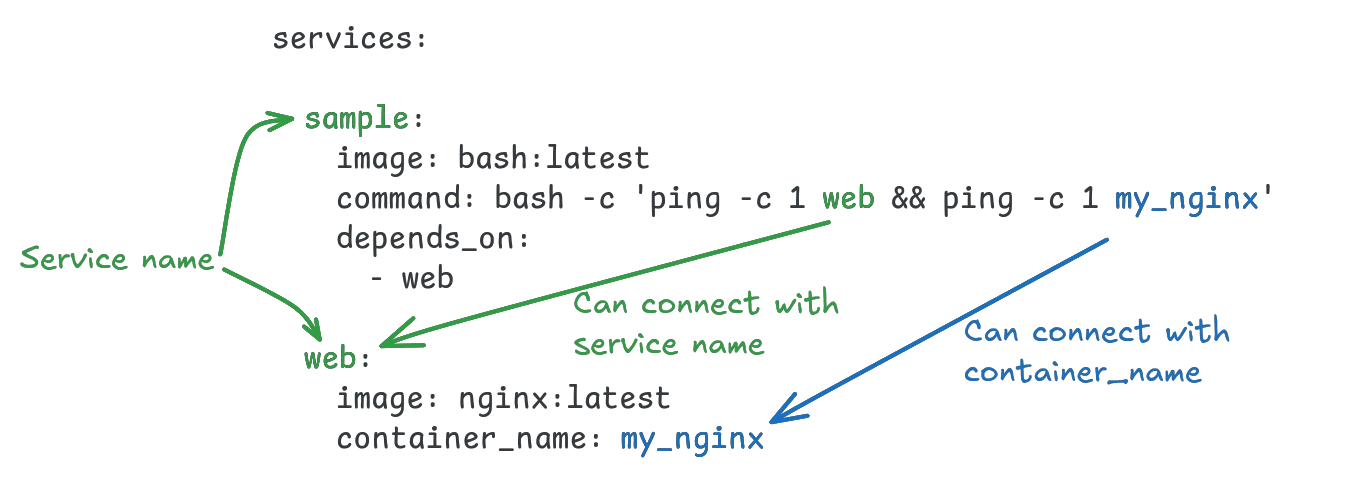

name: sample_defaultThe default network is mainly used to allow services to reach one another over DNS, using their service name and their container_name (if set):

It is common practice to use the service name for DNS resolution, as docker compose will generate prefixed names for containers and specifying a static container_name is rarely useful.

Using variables

Variables are a way to adjust the behavior of a docker compose stack without changing the file itself. All environment variables are available to docker compose, with docker compose defining a few extra default environment variables by itself.

Environment variables can also be read from files, either .env by default, or any other file passed to the --env-file flag at runtime. Variables from files are overridden by environment variables.

Variables can be used in docker-compose.yml files through interpolation similar to the bash shell, by preceding their name with a dollar sign $:

services:

app:

image: bash

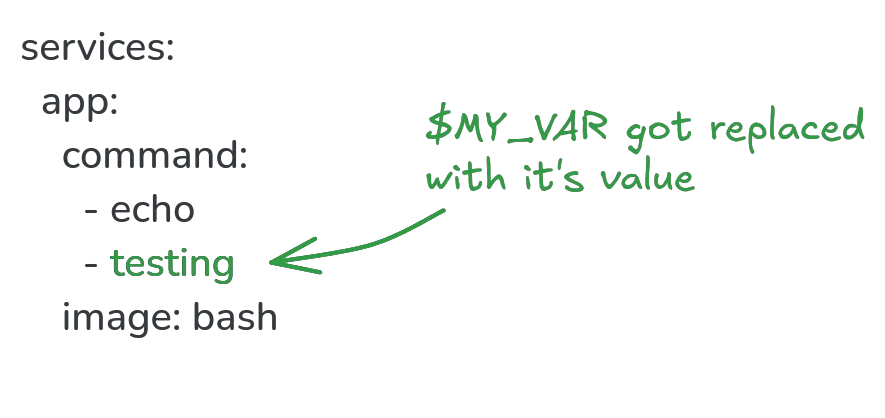

command: echo $XYou can see what a variable gets replaced with by running

X=testing docker compose configIn the output, the variable got replaced with it's value:

Variable edge cases and escaping

As in bash, you can surround the variable name with { curly brackets } to distinguish it from other text. For example if you have a variable $b and want to print it between the letters a and c, then the normal interpolation a$bc doesn't work, because to compose it will look like you want a variable named $bc. Curly brackets solve this edge case: a${b}c. Now it is obvious to the parser what part is the variable name and what should be treated as literal characters.

While using common interpolation makes variable usage easy to understand for most users, it also comes with problems when using multiple programs that need a variable. Take this compose file for example:

services:

app:

image: bash

command: bash -c 'echo $PWD'it looks like a simple bash command, printing the current working directory inside the container - but it doesn't. Instead, it prints the current working directory on the host where the command was run from, because compose interpolated the $PWD variable before it ever reached bash inside the container. To make this work, you need to escape the variable to tell compose that it should not touch this one, by using two dollar signs $$ instead of one:

services:

app:

image: bash

command: bash -c 'echo $$PWD'Now the bash command completes as we wanted, and compose doesn't prematurely replace the variable (it only strips one of the dollar signs away so the variable looks like $PWD to bash instead of $$PWD).

Required variables, defaults & replacement values

Assigning additional context to variables is done by extending the interpolation syntax with an operator and a second value: - for default values, ? for required variables and + for replacement values. Each of these operators can be prefixed with a colon : to treat empty variables as missing.

A sample default value may look like this:

${VERSION:-v1.0}This will use the value of $VERSION if it exists and isn't empty, otherwise defaulting to v1.0.

Required variables don't have a value after the operator, but rather an error message:

${PORT?No port specified}If $PORT is missing, the compose stack will refuse to start with the error "No port specified".

Alternative values (aka replacement values) are used to allow for more flexible configuration switches:

${ENABLE_LOGGING:+true}If $ENABLE_LOGGING has any value, it will be assigned the value true, allowing users to enable the setting without knowing the exact required spelling of the value. All of these variables would enable logging for this example:

ENABLE_LOGGING=yes

ENABLE_LOGGING=true

ENABLE_LOGGING=True

ENABLE_LOGGING=on

ENABLE_LOGGING=1Since boolean values are declared differently between programming languages and applications, using a replacement value can simplify common options for operators.

Configs

Many programs running in containers require configuration to work properly. While newer software may support environment variables for this purpose, older applications still rely on text files for configuration. The traditional approach was to keep those files near the docker-compose.yml file and mount them into the container read-only. This creates unnecessary dependencies and spreads information through multiple files.

Since version 1.23, docker compose supports inline config files using the config top-level directive:

services:

nginx:

image: nginx:latest

ports:

- "80:80"

configs:

- source: nginx_config

target: /etc/nginx/conf.d/default.conf

configs:

nginx_config:

content: |

server {

listen 80;

server_name ${DOMAIN:-localhost};

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

}The configs key allows specifying files directly inside the docker-compose.yml, including contents. The configs key within a service section defines where to mount that virtual file inside the container. Note that variable interpolation works inside the config file contents like anywhere else in a compose file, allowing the use of changeable default values within configs.

Alternatively, you can also read file contents into a config:

configs:nginx_config:file: ./nginx.confThe file inside the container can be restricted further by setting file permissions and ownership information:

services:

nginx:

image: nginx:latest

ports:

- "80:80"

configs:

- source: nginx_config

target: /etc/nginx/conf.d/default.confuid: 1001 # UID of ownergid: 100.1 # GUID of groupmode: 0755 # file mode / permissionsLastly, configs can also be used to convert environment variables into config files, to translate between old software and new configuration management:

configs:nginx_config:environment: NGINX_CONFIGThis will read the contents of $NGINX_CONFIG into the config, enabling it to be mounted as a virtual file within the container.

Secrets

Secrets are a special kind of config, with some added protections: they aren't visible in the output of docker inspect and will be encrypted at rest and in transit for swarm applications. Secrets should be used for sensitive data, such as cryptographic keys, certificates, API tokens etc:

services:

web:

image: nginx:latest

secrets:

- source: server-certificate

target: /etc/server.cert

secrets:

server-certificate:

files: ./server.certJust like configs, secrets can also be created from environment variables:

secrets:

token:

environment: API_TOKENNote that secret contents cannot be provided inline like config data can.

Dependencies

When multiple services inside a compose file depend on one another, this can be expressed with the depends keyword:

services:

ping:

image: bash:latest

command: ping -c 1 web

web:

image: nginx:latestThis basic syntax ensures that the container for web will be started before the ping container is started.

For simple setups this is sufficient, but it has a pitfall: it only manages in what order containers start, it does not wait until the software inside is ready to work.

Here is a sample docker-compose.yml to visualize the problem:

services:

sample_app:

image: mysql:5.7

depends_on:

- db

environment:

MYSQL_ROOT_PASSWORD: root_password

MYSQL_HOST: db

command: mysql -h db -u root -proot_password -e 'CREATE DATABASE IF NOT EXISTS SAMPLE_DB;'

db:

image: mysql:5.7

environment:

MYSQL_ROOT_PASSWORD: root_passwordThe file defines a mysql database and a sample application (in this case just a mysql client connecting and creating a database). Despite sample_app clearly using depends_on to start after the mysql database, you will likely see this error when running docker compose up:

sample_app-1 | ERROR 2003 (HY000): Can't connect to MySQL server on 'db' (111)The problem is caused by start order: the mysql server is started before the sample application, but it needs some time to initialize and prepare the database system before it is ready to accept connections. Docker does not take this into account on it's own, but you can use a healthcheck to manually signal when the database is ready to accept connections, and change the depends_on condition to wait for the database to be healthy before starting the sample_app container:

services:

sample_app:

image: mysql:5.7

depends_on:

db:

condition: service_healthy

environment:

MYSQL_ROOT_PASSWORD: root_password

MYSQL_HOST: db

command: mysql -h db -u root -proot_password -e 'CREATE DATABASE IF NOT EXISTS SAMPLE_DB;'

db:

image: mysql:5.7

environment:

MYSQL_ROOT_PASSWORD: root_password

healthcheck:

test: ["CMD", "mysqladmin", "ping", "-h", "localhost", "-uroot", "-proot_password"]

interval: 10s

timeout: 5s

retries: 5

start_period: 30sNow db is started first, then docker waits for it to be healthy and ready to work, then sample_app is started and can successfully create the SAMPLE_DB database.