Anyone who works in a terminal on Linux systems will sooner or later be confronted with bash and its handling of the default file descriptors and streams. Even users who prefer a different shell will find bash as the default on remote servers, NAS systems and web terminals.

Standard streams in Linux

Every program running on Linux receives 3 file descriptors (open files) by default:

0: standard input (stdin), a read-only file descriptor for data given to the program

1: standard output (stdout), a write-only file descriptor that serves as the destination for normal output

2: standard error (stderr), a write-only file descriptor that serves as the destination for error output and control messages

In a terminal environment, stdout and stderr are already connected to the terminal output by default, so the user can read both of them when executing a command.
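As a rough illustration of the paragraph above, the following sketch reports for each of the three descriptors whether it is currently attached to a terminal. The function name describe_fds is made up for this example; the [ -t N ] test it relies on is standard shell.

```shell
#!/usr/bin/env bash
# describe_fds is a hypothetical helper name used only for this illustration.
describe_fds() {
  local fd
  for fd in 0 1 2; do
    # [ -t N ] succeeds when file descriptor N refers to a terminal.
    if [ -t "$fd" ]; then
      echo "fd $fd: terminal"
    else
      echo "fd $fd: not a terminal"
    fi
  done
}

describe_fds
```

Run directly in an interactive terminal, all three lines should report a terminal. Calling it as describe_fds < /dev/null | cat should change the answer for descriptors 0 and 1, since they then point at a file and a pipe.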

Providing stdin from the terminal

To provide contents for stdin from the command line, there are two options. The first simply reads it from a file:

cat < input.txt

This variant connects input.txt to the stdin of the cat command, which simply writes the contents to the terminal.

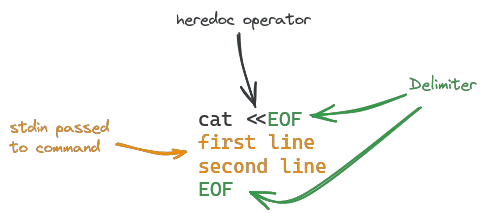
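A small self-contained sketch of the same idea, assuming a throwaway directory created with mktemp; the file contents are invented for the example. Because wc receives the data on stdin rather than as a file argument, it has no filename to print and reports only the count.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)
printf 'first line\nsecond line\n' > "$workdir/input.txt"

# The shell opens input.txt and attaches it to file descriptor 0 of wc.
line_count=$(wc -l < "$workdir/input.txt")

# $(( ... )) normalizes the padding some wc implementations add to the number.
echo "lines read from stdin: $((line_count))"

rm -r "$workdir"
```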

The second option uses a syntax called heredoc:

cat <<EOF
first line
second line
EOF

This is a little more complex: the first part, <<EOF, tells bash to treat the following lines as stdin until it reaches a line containing only EOF. The main advantage of heredoc syntax is that it provides multiline stdin without needing a file to read from.
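A heredoc works with any command that reads stdin, not only cat. The sketch below feeds three arbitrary sample lines to sort and captures the result in a variable; the variable name and the data are assumptions made for the example.

```shell
#!/usr/bin/env bash
# The three fruit names are arbitrary sample data.
sorted=$(sort <<EOF
pear
apple
banana
EOF
)

# Print the lines as sort returned them.
echo "$sorted"
```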

The term EOF is common in programming and stands for End Of File. Any string can be used as the delimiter:

cat <<STOP
first line
second line
STOP

Redirecting output

For output, two streams are provided to a program: stdout (standard output) and stderr (standard error). Normal command output will typically be written to stdout, while control messages, logging information and errors are written to stderr.

Output streams can be redirected to a file with the > operator:

echo "example" > file.txt

A single arrow > overwrites the contents of file.txt. Using two arrows >> appends to the file instead:

echo "example 2" >> file.txt
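The difference between the two operators can be checked with a throwaway file. This is a minimal sketch; the temporary directory and the "old content" line are assumptions added for the demonstration.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)
target="$workdir/file.txt"

echo "old content" > "$target"
echo "example" > "$target"      # overwrites: "old content" is gone
echo "example 2" >> "$target"   # appends a second line

result=$(cat "$target")
echo "$result"

rm -r "$workdir"
```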

The > operator can specify which output stream to redirect by prepending its file descriptor number, for example to redirect stdout and stderr to different files:

command > output.txt 2> error.txt

Streams can also be redirected to one another, e.g. to merge stderr and stdout:

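command > merged_output.txt 2>&1

Redirections are applied from left to right, so stdout has to be pointed at the file before stderr is duplicated onto it. Written the other way around (2>&1 > merged_output.txt), stderr would still go to the terminal. Alternatively, the &> shortcut can be used to redirect both output streams to the same file: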

command &> /dev/null

The command above is a typical use case of this feature, used to "mute" a command (discard all output by writing it to /dev/null).
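Since bash processes redirections from left to right, the order in which they are written changes the outcome. The sketch below makes this visible with a stand-in function that writes one line to each stream; emit and all file names are hypothetical and exist only for this example.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)

# emit is a hypothetical stand-in for any command that writes to both streams.
emit() {
  echo "to stdout"
  echo "to stderr" >&2
}

# Separate files for each stream.
emit > "$workdir/out.txt" 2> "$workdir/err.txt"

# Both streams in one file: stdout is redirected first, then stderr joins it.
emit > "$workdir/merged.txt" 2>&1

# Reversed order: stderr is duplicated onto the current stdout (here the
# command substitution), and only afterwards is stdout moved to the file.
leaked=$(emit 2>&1 > "$workdir/reversed.txt")

echo "out.txt:      $(cat "$workdir/out.txt")"
echo "err.txt:      $(cat "$workdir/err.txt")"
echo "merged lines: $(grep -c '' "$workdir/merged.txt")"
echo "reversed.txt: $(cat "$workdir/reversed.txt")"
echo "leaked:       $leaked"
```

In the reversed case the file receives only the stdout line, while the stderr line ends up wherever stdout pointed before the redirection, which in this sketch is the leaked variable.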

Chaining commands

One of the most powerful features of stream redirection is the ability to turn one command's output into the input of another, creating a chain of commands. A large portion of the UNIX philosophy is based on this principle: programs are expected to be small and do one thing; complex logic is created by chaining many simple commands together.

The operator used for this is the pipe |, placed between commands:

echo "hello" | cat

The example prints hello to stdout, which is passed to cat through stdin.

With this mechanism, command chains can accomplish complex tasks in a way that is fairly easy to follow:

cat employees.txt | grep -v '^$' | sort -f | uniq -i | awk '{print NR, $0}' > sorted_employees.txt

This example reads employee names from employees.txt (one per line), removes empty lines, sorts the names alphabetically while ignoring case, drops duplicates (also ignoring case) and numbers the remaining lines, writing the output to sorted_employees.txt.
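To try the chain without an existing employees.txt, the following sketch generates a small sample file first. The names, the temporary directory and the fixed LC_ALL=C locale are assumptions added so that the run is reproducible.

```shell
#!/usr/bin/env bash
export LC_ALL=C   # fixed locale so the sort order is predictable
workdir=$(mktemp -d)

# Sample input: one empty line and two names repeated in different case.
printf 'carol\nalice\n\nBob\nALICE\nbob\n' > "$workdir/employees.txt"

cat "$workdir/employees.txt" \
  | grep -v '^$' \
  | sort -f \
  | uniq -i \
  | awk '{print NR, $0}' > "$workdir/sorted_employees.txt"

cat "$workdir/sorted_employees.txt"
```

The result should contain three numbered lines, one per distinct name. Note that uniq only compares adjacent lines, which is why the sort step has to come before it.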

Controlling stream buffering behavior

Input and output streams are buffered by default. Depending on your use case, this may cause issues when chaining commands. Buffering has three modes:

Fully buffered: Output is written when the buffer is full (the buffer size depends on the C library and is typically a few kilobytes)

Line-buffered: Output is written every time a newline character is encountered

Unbuffered: No buffering is done, output is written immediately

Output streams connected to a terminal will typically be line-buffered, while pipes default to fully buffered.

The stdbuf command can be used to control buffering. It can manipulate the buffering behavior of all streams available to a program.

The options are as follows:

-i MODE: Set the buffering mode for stdin.

-o MODE: Set the buffering mode for stdout.

-e MODE: Set the buffering mode for stderr.

Where MODE can be:

0: Unbuffered

L: Line buffered (not valid for stdin)

N: Fully buffered, with N being the buffer size in bytes (e.g., 4096)

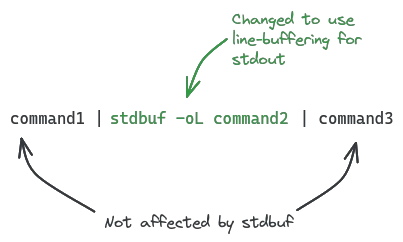

The stdbuf command is simply prepended to the command to run:

stdbuf -o0 tail -f access.log

The above example follows access.log and writes new content to stdout in real time, without any output buffering. The same approach can be used to filter log files while watching them:

tail -f access.log | stdbuf -oL grep "ERROR"

When chaining multiple commands together, only the ones prepended with stdbuf have altered buffering properties; every other command before or after it remains unaffected.
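The effect of line buffering can be made visible by timestamping lines as they arrive at the end of a pipeline. This is only a sketch under a few assumptions: stdbuf from GNU coreutils is installed, grep is dynamically linked so that stdbuf can influence it, and the helper names produce and stamp are invented for the example.

```shell
#!/usr/bin/env bash
# produce is a hypothetical log source: two lines, one second apart.
produce() {
  echo "ERROR first"
  sleep 1
  echo "ERROR second"
}

# stamp prefixes every line with the Unix time at which it was received.
stamp() {
  local line
  while IFS= read -r line; do
    printf '%s %s\n' "$(date +%s)" "$line"
  done
}

if command -v stdbuf >/dev/null 2>&1; then
  # grep writes into a pipe here, so without stdbuf it would be fully buffered.
  line_buffered=$(produce | stdbuf -oL grep "ERROR" | stamp)
  echo "$line_buffered"
else
  echo "stdbuf not available, skipping demonstration" >&2
fi
```

With stdbuf -oL the two timestamps should be about one second apart. Replacing it with a plain grep would typically give both lines the same timestamp, because grep then holds its output until its input ends.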

Buffering adjustments are rarely needed when working with chained commands, but can become a real problem when debugging interactive or delay-sensitive applications.