Writing web applications in bash isn't exactly common, and there are certainly much more mature choices for production-grade software. That being said, bash is more than capable of expressing simple logic and can be a quick and lightweight way to implement web services that don't need scalability or high speed. You should view this article more as a showcase of how bash can be used for simple web software, not as advice for production-grade web development.

Quick introduction to CGI scripts

The Common Gateway Interface (CGI) is a protocol that enables a web server to execute external programs to generate HTTP responses dynamically. It transforms an incoming HTTP request into environment variables and standard input (stdin), runs the appropriate program, and converts the program's output (stdout) into an HTTP response.

- Request Headers: HTTP headers are converted into environment variables prefixed with HTTP_ (e.g., the Accept header becomes HTTP_ACCEPT).

- Request Context: Additional information about the request, like REQUEST_URI, SERVER_NAME, and REQUEST_METHOD, is also provided through environment variables.

- Request Body: For methods like POST, the body (e.g. form data) is passed to the program via stdin.

- Response Output: The program writes its output to standard output (stdout), which the server interprets as the HTTP response. Headers can be included by separating them from the response body with an empty line.

- Response Status: By default, responses have a status code of 200 OK, while non-zero program exit codes result in a 500 Internal Server Error. Custom status codes can be set using a Status header in the response, like Status: 302 Found.

CGI scripts can be written in any programming language, provided the script is executable and can interact with stdin, stdout, and environment variables.

Setup

The pastebin application will run on a linux debian server, with lighttpd as the CGI webserver, bash for the actual CGI scripts and sqlite3 to store pasted data.

The lighttpd server will use the debian-default www-data user as the owner of webserver processes and files and serve content from /var/www/bash_pastebin (intentionally not /var/www/html, since that is standardized for use by other webservers, and we will customize directory permissions).

We start by installing all necessary tools

sudo apt install lighttpd sqlite3Then create the new webroot

sudo mkdir /var/www/bash_pastebinand fix ownership / permissions:

sudo chmod 775 /var/www/bash_pastebin

sudo chown $USER:www-data /var/www/bash_pastebin

sudo chmod g+s /var/www/bash_pastebin

sudo setfacl -d -m u::rwx /var/www/bash_pastebin

sudo setfacl -d -m g::rwx /var/www/bash_pastebin

sudo setfacl -d -m o::r-x /var/www/bash_pastebinThese commands simply ensure that all files created inside are owned by the www-data group, with full access for the group user. This way, you can safely create files inside as a normal system user without causing permission conflicts when lighttpd executes the CGI script under the www-data user later on.

Finally, we create two more directories:

sudo mkdir -p /var/www/bash_pastebin/{cgi-bin,static}The static/ subdirectory is meant for static content like stylesheets, images or javascript files that shouldn't be executed by the server, only served to client requests. The cgi-bin/ directory will be the home of CGI scripts that are meant to be executed instead of served as plaintext content.

All that's missing now is to configure lighttpd for the setup choices we made:

/etc/lighttpd/lighttpd.conf

server.modules = (

"mod_access",

"mod_alias",

"mod_cgi",

"mod_rewrite"

)

server.document-root = "/var/www/bash_pastebin"

server.username = "www-data"

server.groupname = "www-data"

include_shell "/usr/share/lighttpd/create-mime.conf.pl"

$HTTP["url"] =~ "^/cgi-bin/" {

cgi.assign = ( ".cgi" => "" )

url.access-deny = ( "" )

$HTTP["url"] =~ "^/cgi-bin/.*\.cgi$" {

url.access-deny = ( )

}

}

url.rewrite-once = (

"^/$" => "/cgi-bin/index.cgi",

"^/static/" => "",

"^/([^?]+)(.*)$" => "/cgi-bin/$1.cgi$2"

)

$HTTP["url"] =~ "^/static/" {

cgi.assign = ()

}The config first loads all needed modules, sets up user and document root and imports the debian-default mime type config script.

Next, it denies access to all files inside cgi-bin/ (so they won't be served), except for files ending in .cgi, which should be executed instead. This way, we can have other files like our sqlite3 database or utility scripts in the directory without exposing them to the internet.

The rewrite rules make urls a little prettier: they will transform user-friendly request paths like /news into /cgi-bin/news.cgi where our dynamic scripts reside. Requests to anything in static/ are excluded from this rule, and the index path / will resolve to /cgi-bin/index.cgi.

Lastly, the config explicitly disables cgi support within static/ to ensure no files inside are executed.

Restarting the lighttpd service will apply the changes

sudo systemctl restart lighttpd.serviceNow we are ready to write the CGI scripts.

Preparing the database

Our database needs a table to hold all the paste contents and information, which we have to create manually:

sqlite3 data.db "CREATE TABLE pastes (

id INTEGER PRIMARY KEY,

title TEXT NOT NULL,

content TEXT NOT NULL,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);"The table uses a sqlite3-specific trick here: the id column will implicitly received auto-incremented values as sqlite substitutes it's internal rowid column for it.

Utility functions

Web applications have some common tasks that are needed for multiple pages. Other languages use modules or libraries to group common functionality, but bash doesn't have those features. Instead, we will use a normal shell script named util.sh inside cgi-bin/ that only defines functions, and load them with the source builtin as a way to "Import" it.

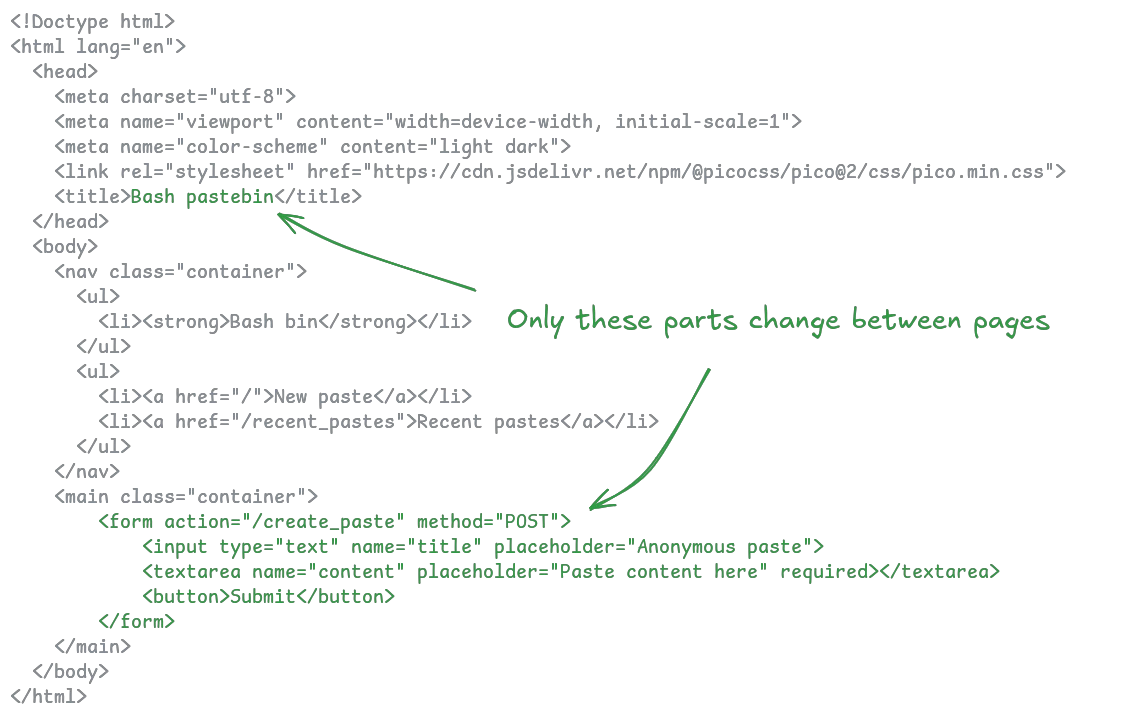

The html boilerplate for a page seems like a good first candidate to put into our utility script. Consider this basic HTML output (using pico css to make the page look a little better without much effort):

As you can see, most of the response remains the same for every page, so turning that into a function is a quick win for productivity:

util.sh

# outputs start of HTML page boilerplate, including content-type header

function page_start(){

echo "Content-type: text/html; charset=utf-8"

echo ""

cat <<EOF

<!Doctype html>

<html lang="en">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<meta name="color-scheme" content="light dark">

<link rel="stylesheet" href="https://cdn.jsdelivr.net/npm/@picocss/pico@2/css/pico.min.css">

<title>$1</title>

</head>

<body>

<nav class="container">

<ul>

<li><strong>Bash bin</strong></li>

</ul>

<ul>

<li><a href="/">New paste</a></li>

<li><a href="/recent_pastes">Recent pastes</a></li>

</ul>

</nav>

<main class="container">

EOF

}

# outputs end of HTML page boilerplate

function page_end(){

cat <<EOF

</main>

</body>

</html>

EOF

}These two functions are supposed to be used together; a page should start by calling page_start with the desired title, then output the main content and call page_end before exiting. You may notice two approaches to writing output: echo for single lines and cat <<EOF for multiline strings. You can choose either one of these, or a mix of both like the example above. We chose to start the page_start function with echo statements to make it obvious the empty line is intentional (it is needed to separate the Content-type header from the page content in the response), but fall back to multiline strings for convenience when outputting the html content.

We can use our new boilerplate functions to create error pages:

# outputs http error 400 Bad Request

# with message $1 (default: "Invalid request data")

function http_error_400(){

echo "Status: 400 Bad Request"

page_start "Bad request"

echo "<h1>Bad Request</h1>"

echo "<p>${1-Invalid request data}</p>"

page_end

exit 0

}

# outputs http error 404 Not Found

# with message $1 (default: "Page not found")

function http_error_404(){

echo "Status: 404 Not Found"

page_start "Not found"

echo "<h1>Not found</h1>"

echo "<p>${1-Page not found}</p>"

page_end

exit 0

}

# outputs http error 500 Internal Server Error

# with message $1 (default: "Server could not process the request")

function http_error_500(){

echo "Status: 500 Internal Server Error"

page_start "Server Error"

echo "<h1>Server error</h1>"

echo "<p>${1-Server could not process the request}</p>"

page_end

exit 0

}As an added bonus, the error pages will set correct HTTP status codes and exit with exit code 0. They need to exit cleanly despite handling an error case, because the CGI protocol would not show our page content for non-zero exit codes.

In similar fashion, we can make http redirects a little easier:

# outputs HTTP redirect to $1 with status 302 and exits

function http_redirect(){

echo "Status: 302 Found"

echo "Location: $1"

echo ""

exit 0

}Note the last echo; it is important to signal to the CGI server that the output are HTTP headers, not content.

Next on the list is url encoding, or rather decoding url-encoded data. HTTP form data from html form submissions is transmitted in url-encoded format, so our bash scripts need to decode it back into their original strings. There is no native urldecode function in bash, but the echo builtin supports interpreting escape sequences prefixed with \x. All we need to do is replace all + with spaces and the % in front of escape sequences with \x, then feed the resulting string through echo with the -e flag enabled:

# returns $1 with decoded url escape sequences

function url_decode(){

local url="${1//+/ }"

echo -e "${url//%/\\x}"

}Note the double \\, needed to use a literal backslash character \ in the replacement.

The last two utility functions we need are security-related. First off, passing values to sqlite3 within a single string makes us vulnerable to SQL injection attacks, so we need to escape quotes to ensure attackers cannot break out of string literals to inject malicious code:

# returns $1 with all single-quotes escaped

# ONLY safe for use in sqlite string literals!

function sqlite_escape_string(){

echo "${1//\'/\'\'}"

}Escaping single quotes in sqlite3 is done with two single quotes, not by prepending a backslash like in most other escape sequences. Be careful when using this function; it is only safe when used for string literals in equality checks (WHERE id='<value>'); it cannot prevent attacks for unquoted values or other conditions (even slight variations like WHERE name LIKE '<value' would need more escaping, for example the wildcard symbol %).

The second safety functions will turn some problematic html characters into escape sequences, because we will display arbitrary text data users paste, but don't want them to be able to change the HTML contents of a page (leading to an XSS attack). A naive way to go about this is to escape the < and > characters, so no HTML elements can be created:

# returns $1 with html escaped to display as literal string:

# "<" => "<" | ">" => ">" | "&" => "&"

# NOT safe for use in js or html attributes!

# may break outside of utf8 contexts

function html_escape(){

local html="${1//&/\&}"

html="${html//>/\>}"

echo "${html//</\<}"

}Note that the & symbols is also escaped, because it is interpreted as the beginning of an escape sequence in html, so not displayed unless escaped properly. This escape function is only safe when used as an html element, for example <div>ESCAPED_CONTENT</div> - it is not safe for use as an attribute (<div title="VALUE">) and certainly not when printed into javascript variables.

This concludes all the necessary utility functions, time to write some business logic!

Home page

The home page or index page is the content served when users visit the / path on a website. The lighttpd config rewrites this for us to point at /cgi-bin/index.cgi:

cgi-bin/index.cgi

#!/bin/bash

source util.sh

page_start "My pastebin"

cat <<EOF

<form action="/create_paste" method="POST">

<input type="text" name="title" placeholder="Paste title (optional)">

<textarea name="content" placeholder="Paste content here"

oninput="this.style.height='';this.style.height=this.scrollHeight+'px'" required>

</textarea>

<button>Submit</button>

</form>

EOF

page_endAlthough very small, the script makes good use of our boilerplate utility functions and produces an entire html form that users can fill out and submit to paste content onto our system. It even includes a little javascript hack to dynamically resize the textarea element to it's content height for a better user experience.

Note the source "util.sh" statement at the top, used to import the utility script from the previous step, including the necessary functions page_start and page_end. Since our directory permissions enforce a umask of 775 for all files, it should have execution permissions by default, so no need to fix anything here. Point your browser at http://localhost/ to see the front page of our pastebin app.

Saving paste content

Up next is handling the form data sent to /create_paste through an http POST request, by creating /cgi-bin/create_paste.cgi:

#!/bin/bash

source "util.sh"

read -n "$CONTENT_LENGTH" POST_DATA

IFS='&' read -r -a pairs <<< "$POST_DATA"

for pair in "${pairs[@]}"; do

IFS='=' read -r key value <<< "$pair"

key=$(url_decode "$key")

value=$(url_decode "$value")

case "$key" in

"title")

PASTE_TITLE="$value"

;;

"content")

PASTE_CONTENT="$value"

;;

*)

http_error_400 "Unexpected fields in form data"

;;

esac

done

[[ -n $PASTE_CONTENT ]] || http_error_400 "Form field 'content' cannot be empty"

PASTE_TITLE=$(sqlite_escape_string "$PASTE_TITLE")

PASTE_CONTENT=$(sqlite_escape_string "$PASTE_CONTENT")

RESULT=$(sqlite3 data.db "INSERT INTO pastes (title, content) VALUES ('$PASTE_TITLE', '$PASTE_CONTENT') RETURNING id")

[[ $? -eq 0 ]] || http_error_500

http_redirect "/paste?id=$RESULT"The script starts by importing our utility script, then reads the POST form data. The form contents are url-encoded key/value pairs in the body of the http request. Thanks to the CGI protocol, the request body is available through stdin, and the CONTENT_LENGTH variable tells us the length, aka how many bytes we need to read.

After reading the contents, we split the data by & characters to get a list of key=value pairs, which we then loop over and split into a key and a value. Both need to be url-decoded back into plaintext. For security, we only accept the expected form fields title and content, returning an http error if any other form fields were sent.

After the reading loop, we added another check to return an error if the paste content is empty (no need to store empty entries).

Because PASTE_TITLE and PASTE_CONTENT will be passed to sqlite3 in a moment, we need to pass them through our sqlite_escape_string function to escape single quotes, ensuring malicious users cannot break out of the string literal to run SQL injection attacks.

Lastly, we feed insert the new paste contents into our sqlite database and return the generated id column, saving it to the RESULT variable. The exit code of sqlite3 will be non-zero if there was an error with the query, so checking the last exit code available in $? allows us to verify that the data was inserted successfully, or show an error if not. The final line redirects to the /paste script, with a GET variable id set to the generated row id from the insert sqlite statement.

Showing a single paste

The last missing piece for our pastebin's core functionality is showing the contents pasted by a user. We do this at the path /paste, handled by /cgi-bin/paste.cgi:

#!/bin/bash

source "util.sh"

IFS='&' read -r -a pairs <<< "$QUERY_STRING"

for pair in "${pairs[@]}"; do

IFS='=' read -r key value <<< "$pair"

key=$(url_decode "$key")

value=$(url_decode "$value")

if [[ $key = "id" ]]; then

PASTE_ID="$value"

fi

done

PASTE_ID=$(sqlite_escape_string "$PASTE_ID")

EXISTS=$(sqlite3 data.db "SELECT 1 FROM pastes WHERE id='$PASTE_ID'")

[[ $? -eq 0 ]] || http_error_500

[[ "$EXISTS" -eq "1" ]] || http_error_404 "No paste with this id '$PASTE_ID'"

PASTE_TITLE=$(sqlite3 data.db "SELECT title FROM pastes WHERE id='$PASTE_ID'")

[[ $? -eq 0 ]] || http_error_500

PASTE_CONTENT=$(sqlite3 data.db "SELECT content FROM pastes WHERE id='$PASTE_ID'")

[[ $? -eq 0 ]] || http_error_500

PASTE_DATE=$(sqlite3 data.db "SELECT strftime('%m/%d/%Y at %H:%M', created_at) FROM pastes WHERE id='$PASTE_ID'")

[[ $? -eq 0 ]] || http_error_500

PASTE_TITLE=$(html_escape "$PASTE_TITLE")

PASTE_CONTENT=$(html_escape "$PASTE_CONTENT")

page_start "View paste: $PASTE_TITLE"

cat <<EOF

<hgroup>

<h2>$PASTE_TITLE</h2>

<p>posted on $PASTE_DATE</p>

</hgroup>

<pre><code>$PASTE_CONTENT</code></pre>

EOF

page_endSimilar to creating a paste, we need to read a url-encoded variable again, but this time from the URL query, which is made available through the QUERY_STRING variable by the CGI protocol. This time we ignore all variable except id, because additional variables could be added here (for example utm_ variables for marketing or source of redirects).

With the id parsed out of the query string, we first check it belongs to a paste in our database, showing a 404 Not Found error it not. Next, we run three separate queries to retrieve the title, content and human-readable formatted creation date of the paste from our sqlite database. We could have done this in a single query, but would then have had to parse a format like sqlite3's table format or csv, which is a much bigger challenge. Separate queries don't have this problem, so we use them for simplicity.

Paste title and content will be displayed within the html response, so we need to feed them through our html_escape function to prevent XSS injection attacks. Normally, we would also need to replace all newlines \n with <br> elements to preserve them in html, but we instead opt to display paste contents within a <pre> element that neatly preserves newlines on its own, saving us another utility function.

Returning output to the user once again uses our page_start and page_end functions, with a tiny bit of markup and the paste contents inbetween.

Listing recently published pastes

As a bonus, we will also add an overview of the most recently created pastes at /recent_pastes, generated by /cgi-bin/recent_pastes.cgi:

#!/bin/bash

source "util.sh"

PASTE_IDS=$(sqlite3 data.db "SELECT id FROM pastes ORDER BY id DESC LIMIT 10")

[[ $? -eq 0 ]] || http_error_500

readarray -t ids <<< "$PASTE_IDS"

OUTPUT=""

for PASTE_ID in "${ids[@]}"; do

TITLE=$(sqlite3 data.db "SELECT title FROM pastes WHERE id=$PASTE_ID")

[[ $? -eq 0 ]] || http_error_500

DATE=$(sqlite3 data.db "SELECT strftime('%m/%d/%Y at %H:%M', created_at) FROM pastes WHERE id=$PASTE_ID")

[[ $? -eq 0 ]] || http_error_500

if [[ -z $TITLE ]]; then

TITLE="untitled paste"

fi

TITLE=$(html_escape "$TITLE")

OUTPUT+="

<div>

<a href='/paste?id=$PASTE_ID'>

<button class='outline secondary' style='width:100%;margin-bottom:10px'>

$TITLE<br>

<small><em>posted on $DATE</em></small>

</button>

</a>

</div>

"

done

page_start "Recent pastes"

echo "$OUTPUT"

page_endThe script starts by fetching up to 10 of the most recent pastes from the sqlite database, ordered by the generated id in descending order. The query outputs the ids one per line, which bash's readarray function can easily turn into an array of ids.

We then create an empty OUTPUT string to hold response contents until after the loop. Using this approach is necessary, as printing output partially with each loop iteration would prevent us from showing errors if a later iteration fails (cannot override http headers that were already sent). Each iteration of the loop fetches the title and formatted date for its id, then escapes html characters in the TITLE variable and appends the html-formatted output to the OUTPUT variable. Note that the DATE variable doesn't need to be escaped, because we control it's contents with the strftime SQL function in the query. Similarly, the PASTE_ID variable doesn't need to be passed to sqlite_escape_string or quoted in the query, because it's contents come from an INTEGER column from our own database, ensuring they contain no problematic characters.

After the loop, we output normal page start/end contents and the OUTPUT variable, completing the script.